AI Joins The Battlespace - Issue #18 of 2023

Peter Thiel's CIA-backed big data firm announces a military AI platform to reshape the battlefield...

AI in the Battlespace

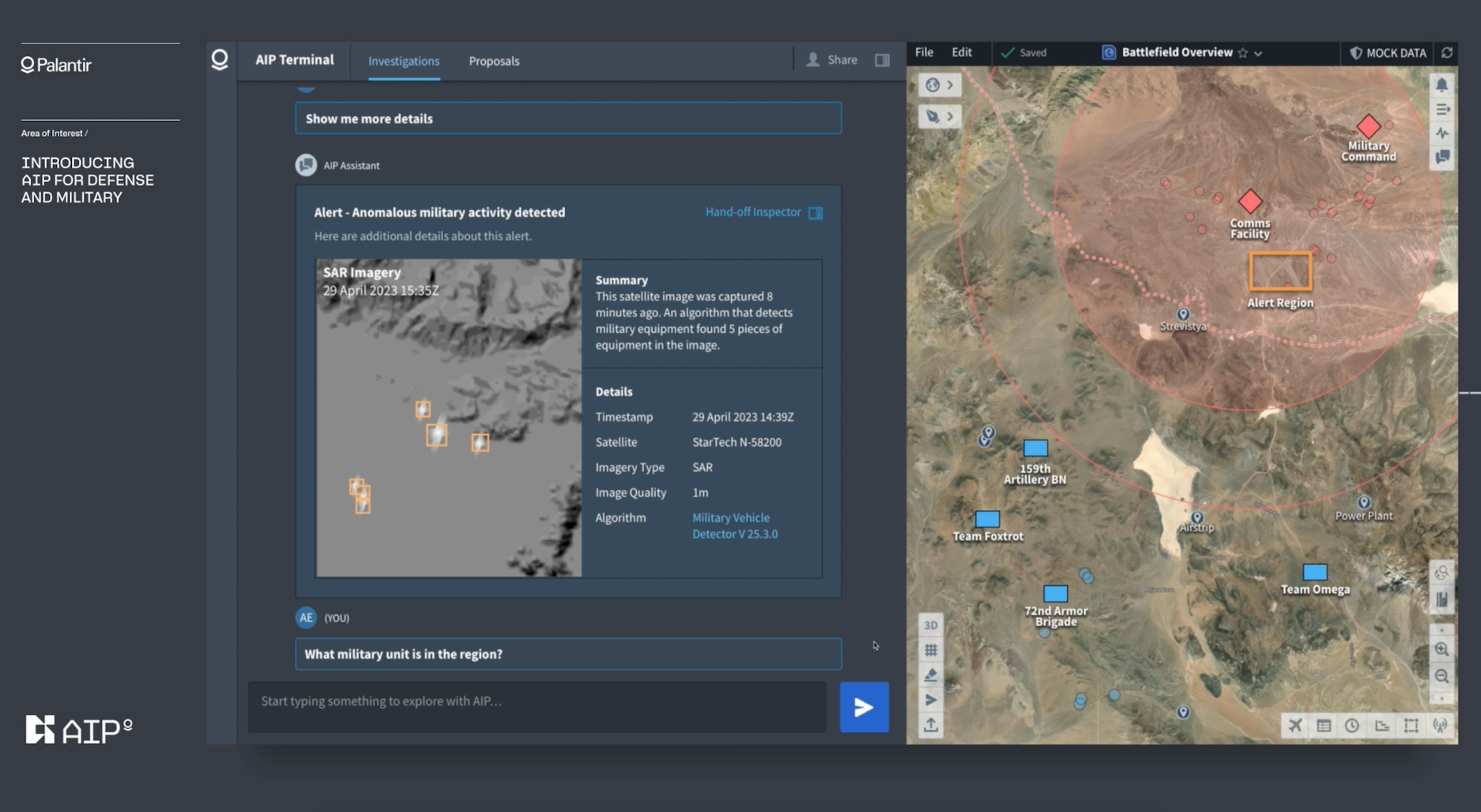

Palantir – the big data operation co-founded by Peter Thiel and backed early on by the CIA's venture arm, In-Q-Tel – recently announced its Palantir Artificial Intelligence Platform (AIP) for military 'defense' use and published a demo of the AI terminal engaging a notional enemy armor element in a hypothetical conflict in "eastern Europe." (The demo is obviously just a model of how AIP would engage the Russian military in Ukraine.) The platform uses large language models and other AI tools to facilitate efficiency-optimized warfighting.

For anyone unaware, Palantir cut its teeth in the Patriot Act-era GWOT, serving as a darling of the DOD, the USIC, and the domestic surveillance state. The company and its tools collect massive amounts of data for military and law enforcement applications.

The data obtained by Palantir's systems come together to create a "full data ecosystem," where info that may previously have been uncollected, uncorrelated, or siloed is gathered, combined, and reconciled, then assessed by AI to create meaningful visualizations and insights that (ostensibly) facilitate faster and higher-quality decision making by officers in conflicts.

Regarding maneuver warfare, Palantir AIP basically seeks to hack the OODA loop and enable military commanders and leading-edge operators to arrive at the best decisions in complex circumstances faster than enemy commanders while avoiding the 'tactical tunnel vision' that human decision-makers are susceptible to.

In the demo video, the AIP terminal appears to do a good job of unifying situational awareness and eliminating friction or loss of fidelity in the decision-making chain. It's what the early-GWOT FBCB2 'Blue Force Tracker' systems tried to do, except now it's fully realized.

If you watch or learn nothing else about AI, you should take 8 minutes to watch this video:

I think this is a mixed bag. On one hand, military AI development and implementation are part of a global arms race, and the US should be mindful of that. We do need to be first to the finish, if for no reason other than self-preservation. The proxy war with Russia has made it clear that America's most advanced systems can have an outsized impact against militaries running on (mostly) legacy tanks, planes, and communication systems. Having the best tools matters; it would not be good policy to cede primacy in AI to China (or anyone else).

On the other hand, AI is destined to fail if the military attempts to use it to outsource the work of leaders to bureaucrats – which it probably will.

There is a Patton quote, "No good decision was ever made in a swivel chair," and AIP looks like a fast way for many more decisions to be made by swivel-chair operators.

I look at this demo and ask myself: would the outcome have been different if the US had had Palantir AIP at the start of GWOT? I'm not confident the answer is yes.

AIP basically looks like AI-powered CRM software for the military. Sure, AIP can probably do a decent enough job of helping to administer C2 or logistics. But the American military doesn't really have a problem with the command and control side of things. And the US military kept troops in Afghanistan supplied, fed, trained, housed, cared for, and rotated for twenty years in some of the most unforgiving pieces of terrain on earth. So what problem, exactly, is AIP solving?

Let's look back and review America's experience in Afghanistan.

America routinely won her tactical-level engagements in Afghanistan, and neither the Taliban nor Al-Qaeda ever had the kinetic ability to push US forces out of the country. The military faced no real limits on freedom of maneuver and dealt with no meaningful scarcity in terms of logistics.

But as we learned in the Afghanistan Papers, the military was basically just there to stand around; it definitely wasn't there after the invasion to impose any type of will on the enemy (whoever that was). After the invasion, the only way to 'win' would have been to kill a huge swath of Afghanistan's male population (Lindy, by the way - Lee). The military had the capability to do exactly that, of course, but killing people in a war can get you in trouble these days. You may even remember that the military briefly considered creating a medal for showing restraint in combat.

And so, overseeing the war became something of a liability. There was no clear purpose; a lot of American soldiers were dead, a lot of Afghan civilians were dead, billions were being spent, and 'democracy' was definitely not spreading. No one wanted to be the last person to touch it, so it dragged on for years and years longer than needed. And when it did finally draw to an end, it was an unmitigated disaster that resulted in avoidable loss of life and global humiliation.

So why did this happen? Largely because GWOT was run by bureaucrats. Yarvin correctly points out that bureaucracies exist to diffuse responsibility away from individual actors; bureaucrats are always "just following orders" and never take individual responsibility for the outcome of a situation. Bureaucracies allow a political party to hire friends – qualified or not – and give them a steady paycheck without ever having to fire them for poor performance or be embarrassed by any single person making a public-facing error.

If you don't agree with me, consider that no US general oversaw a final and decisive victory during GWOT, but two of them – McChrystal in 2010 and Mattis in 2013 – were fired for criticizing the bureaucracy. No one was relieved for losing the war, only for criticizing the hive mind of the American deep state.

And to further prove my point, consider that the commanders and generals who spent twenty years achieving null outcomes in combat are now highly paid consultants and "experts" in counter-insurgency on cable news segments.

With this considered, it seems obvious to me that adding AI to the battlespace will facilitate three main outcomes, and none of them will involve winning wars:

- AIP will be another cost driver for military-industrial complexity that taxpayers will be pay-pigged into bending over for.

- The software will serve as an additional bureaucratic layer, further diffusing responsibility away from any one of our leaders for inevitably losing tomorrow's wars.

- The FBI will inevitably adopt battlespace AI in a program that exploits Ring doorbells and Alexa devices to detect when middle-class homeowners utter the phrase "Let's go, Brandon" during backyard cookouts, then automatically tasks an MQ-9 Reaper drone with hitting the homeowner with an R9X 'Flying Ginsu' Hellfire in the name of public safety.

My point with all this is that I can see AIP being used for everything under the sun except what it should be used for. Winning wars means killing a lot of bad guys, and killing lots of bad guys isn't "spreading democracy." Much of the American mindset is centered on not losing rather than decisively winning. This much is even apparent in the marketing materials:

Palantir makes a big point of mentioning the "guardrails" built into the system, which prevent things from getting dicey in terms of rule-following. One of the last things a ground commander should be hung up on is the legal, regulatory, and ethical risks of winning a fight. I've joked a lot about the military being a weaponized HR department, but it's in plain text here.

The military should exist to win wars. There are only a few ways to win a war (they all involve doing things that look bad on the evening news), and I don't think outsourcing combat leadership to a software suite focused on "mitigating legal, regulatory, and ethical risks" is one of them. By definition, this is playing not to lose instead of playing to win. It's like we learned nothing from Afghanistan.

There's a philosophic recursion problem in emphasizing that your warfighting software system is "compliant." It really shows one's commitment to corporate HR rather than one's country. Compliant? Compliant with what? We're fighting a war – trying to kill people. If we're the ones fighting the war, then everything we do is compliant because we set our own warfighting policies. We're talking about a system built on the premise that machines can make their own decisions about how to conduct warfare, and it's being marketed as "responsible" and "compliant"? Give me a break.

Currently, America can deploy ground forces anywhere in the world in 18 hours and supply them indefinitely with beans, bullets, and band-aids. But our military is trapped in the mindset of "mitigating legal, regulatory, and ethical risk," and so we use our extensive force projection and sustainment capability to perpetuate stalemates – stalemates that would easily be broken if our flag officers could muster the aggregate fighting spirit of Patton's left nut between them.

And so if you want to have super advanced AI software systems on the battlefield, it's probably okay, as long as these systems are used to complement well-trained infantrymen and commanders who see victory as their own personal responsibility. But if we create a military where soft-bodied rejects try to win ground conflicts from AI workstations wired into air-conditioned conex boxes, we are going to be in for a rude awakening.

A military can be given all the data in the world and still very efficiently lose a war. As the saying goes: "knowing is half the battle, and the other half is extreme violence." The information is meaningless unless the military is willing and able to act decisively on the information gleaned from big data analytics.

No matter how smart AI gets, a country will always need groups of young men physically fit enough to sprint up the side of a mountain and mentally rugged enough to stab their enemies with bayonets once they reach the top. You can win a war without AI, but you can't win a war without these guys.

So I hope that Palantir AIP works out for the best. But America's recruiting crisis and current low standards of physical fitness seem to be more urgent problems. Hopefully, someone smart somewhere understands that AIP is a nice-to-have, not a need-to-have.

Related:

Other Stories

Michael Lind on Labor

This week, RealClear posted an excerpt from Michael Lind's Hell to Pay: How the Suppression of Wages Is Destroying America. It's worth reading. I'm not necessarily aligned with Lind on everything, but many of his criticisms of the current American labor and wage model ring very true.

It turns out that when the money is fake, a lot of other things – like credentials – are fake, too.

Read More:

RFK on IM-1776

There's been some interest in dissident circles in Robert F. Kennedy Jr.'s presidential campaign. This Kennedy family member is notable in that he's focused on physical health and wellness and is largely anti-vaccine and anti-Big Pharma.

I don't think there's much chance of him gaining anything from electoral politics, but he's an interesting personality. He did a short interview with IM-1776 and detailed some of his positions; the link is below if you're interested. RFK could end up serving as a spoiler candidate in the 2024 election, so it's something to keep an eye on.

Read More:

WhatsApp Open Mic Problem

If you are a WhatsApp user, give this a read. The app is eavesdropping on you through your phone's microphone even after you've closed it. Users discovered and verified this problem, and WhatsApp had not issued an official statement at the time of this writing.

Read More: